About xAI4Biometrics

The xAI4Biometrics Workshop at WACV 2022 aims to promote a better understanding, through explainability and interpretability, of currently common and accepted practices across a wide range of biometric applications. These applications, in scenarios such as identity verification for access and border control, watchlist surveillance, anti-spoofing measures embedded in biometric recognition systems, and forensics, among many others, affect the daily life of an ever-growing population. xAI4Biometrics is organised by INESC TEC and co-organised by the European Association for Biometrics (EAB).

Important Info

Where? At WACV 2022, in Waikoloa, HI, USA

When? 4 January 2022 (Afternoon)

Abstract Submission: 4 October 2021

Paper Submission: 25 October 2021

Author Notification: 15 November 2021

Camera Ready & Registration (Firm deadline): 19 November 2021

Keynote Speakers

Abstract: Scientific fields that are interested in faces have developed their own sets of concepts and procedures for understanding how a target model system (be it a person or algorithm) perceives a face under varying conditions. In computer vision, this has largely been in the form of dataset evaluation for recognition tasks where summary statistics are used to measure progress. While aggregate performance has continued to improve, understanding individual causes of failure has been difficult, as it is not always clear why a particular face fails to be recognized, or why an impostor is recognized by an algorithm. Importantly, other fields studying vision have addressed this via the use of visual psychophysics: the controlled manipulation of stimuli and careful study of the responses they evoke in a model system. In this talk, we suggest that visual psychophysics is a viable methodology for making face recognition algorithms more explainable, including the ability to tease out bias. A comprehensive set of procedures is developed for assessing face recognition algorithm behavior, which is then deployed over state-of-the-art convolutional neural networks and more basic, yet still widely used, shallow and handcrafted feature-based approaches.

About the Speaker: Walter J. Scheirer, Ph.D., is an Assistant Professor in the Department of Computer Science and Engineering at the University of Notre Dame. Previously, he was a postdoctoral fellow at Harvard University, with affiliations in the School of Engineering and Applied Sciences, the Dept. of Molecular and Cellular Biology and the Center for Brain Science, and the director of research & development at Securics, Inc., an early-stage company producing privacy-enhancing biometric technologies. He received his Ph.D. from the University of Colorado and his M.S. and B.A. degrees from Lehigh University. Dr. Scheirer has extensive experience in the areas of computer vision, machine learning and image processing. His current research is focused on media forensics and studying disinformation circulating on social media.

Abstract: In recent years we have witnessed increasingly diverse application scenarios of AI systems in our daily life, despite societal concerns about some of the weaknesses of the technology. The sustainable deployment and future prospects of AI systems will rely heavily on the ability to trust the recognition process and its output. As a result, in addition to striving for higher accuracy, trustworthy AI has become an emerging research area. In this talk, we will take face recognition, a research field specialized in recognizing individuals based on their faces, as an example of an AI subfield, and discuss various research problems in trustworthy face recognition, including topics such as security (e.g., presentation attack detection and forgery detection), bias, adversarial robustness, and interpretable recognition. We will present some recent work on these topics and discuss the remaining issues that warrant future research.

About the Speaker: Xiaoming Liu earned his Ph.D. degree in Electrical and Computer Engineering from Carnegie Mellon University in 2004. He received a B.E. degree from Beijing Information Technology Institute, China, and an M.E. degree from Zhejiang University, China, in 1997 and 2000 respectively, both in Computer Science. Prior to joining Michigan State University (MSU), he was a research scientist at the Computer Vision Laboratory of GE Global Research. His research interests include computer vision, pattern recognition, machine learning, biometrics, and human-computer interfaces.

Call for Papers

The xAI4Biometrics Workshop welcomes submissions that focus on biometrics and promote the development of: a) methods to interpret the biometric models to validate their decisions as well as to improve the models and to detect possible vulnerabilities; b) quantitative methods to objectively assess and compare different explanations of the automatic decisions; c) methods to generate better explanations; and d) more transparent algorithms.

Topics of Interest

The xAI4Biometrics Workshop welcomes works that focus on biometrics and promote the development of:

- Methods to interpret the biometric models to validate their decisions as well as to improve the models and to detect possible vulnerabilities;

- Quantitative methods to objectively assess and compare different explanations of the automatic decisions;

- Methods and metrics to study/evaluate the quality of explanations obtained by post-model approaches and improve the explanations;

- Methods to generate model-agnostic explanations;

- Transparency and fairness in AI algorithms avoiding bias;

- Interpretable methods able to explain decisions of previously built and unconstrained (black-box) models;

- Inherently interpretable (white-box) models;

- Methods that use post-model explanations to improve the models’ training;

- Methods to achieve/design inherently interpretable algorithms (rule-based, case-based reasoning, regularization methods);

- Study on causal learning, causal discovery, causal reasoning, causal explanations, and causal inference;

- Natural language generation for explanatory models;

- Methods for adversarial attack detection, explanation and defense ("How can we interpret adversarial examples?");

- Theoretical approaches to explainability (“What makes a good explanation?”);

- Applications of all the above including proof-of-concepts and demonstrators of how to integrate explainable AI into real-world workflows and industrial processes.

The workshop papers will be published in IEEE Xplore as WACV 2022 Workshops Proceedings and will be indexed separately from the main conference proceedings. The papers submitted to the workshop should follow the same formatting requirements as the main conference. For more details and templates, click here.

Don't forget to submit your paper by 25 October.

Programme

Opening Session

Walter Scheirer, University of Notre Dame, USA

Keynote Talk: Visual Psychophysics for Making Face Recognition Algorithms More Explainable

Oral Session I

- "Explainability of the Implications of Supervised and Unsupervised Face Image Quality Estimations Through Activation Map Variation Analyses in Face Recognition Models" - Biying Fu, Naser Damer

- "Interpretable Deep Learning-Based Forensic Iris Segmentation and Recognition" - Andrey Kuehlkamp, Aidan Boyd, Adam Czajka, Kevin Bowyer, Patrick Flynn, Dennis Chute, Eric Benjamin

- "Skeleton-based typing style learning for person identification" - Lior Gelberg, David Mendlovic, Dan Raviv

Coffee Break

Xiaoming Liu, Michigan State University, USA

Keynote Talk: Trustworthy Face Recognition

Oral Session II

- "Supervised Contrastive Learning for Generalizable and Explainable DeepFakes Detection" - Ying Xu, Kiran Raja, Marius Pedersen

- "Myope Models - Are face presentation attack detection models short-sighted?" - Pedro C. Neto, Ana F. Sequeira, Jaime S. Cardoso

- "Semantic Network Interpretation" - Pei Guo, Ryan Farrell

Closing Session

* All times are HST - Hawaii Standard Time *

Organisers

History

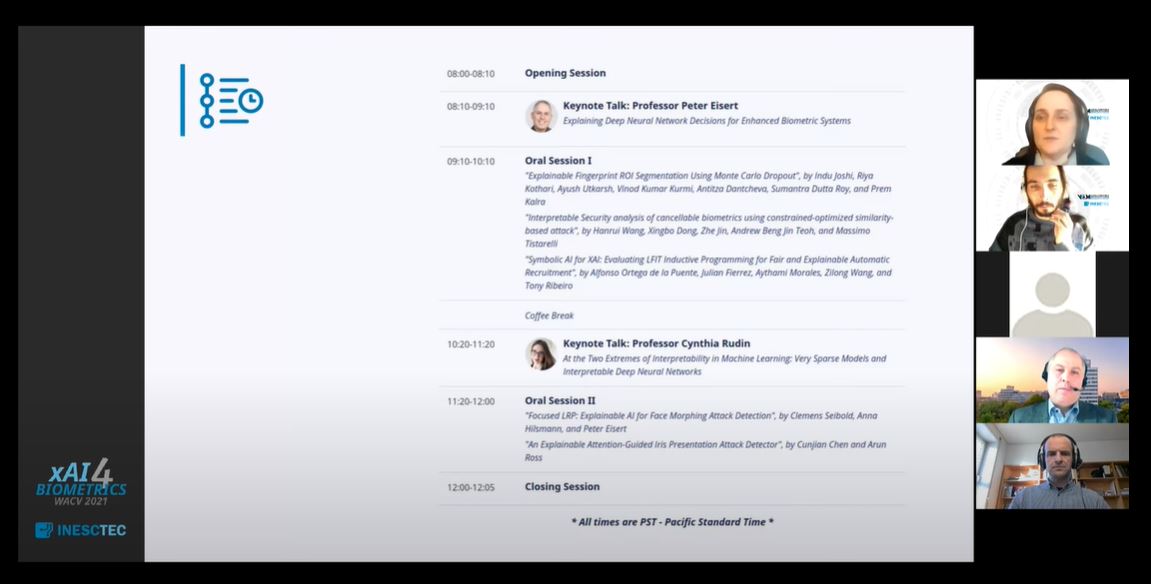

Check out some pics from the first edition of xAI4Biometrics. You may also visit the website here.

Support

Thanks to those helping us make xAI4Biometrics an awesome workshop!

Want to join this list? Check our sponsorship opportunities here!