About xAI4Biometrics

The xAI4Biometrics Workshop at ECCV 2024 aims to promote research on Explainable & Interpretable AI in order to facilitate the adoption of AI/ML in the biometrics domain, specifically by fostering transparency and trust. The xAI4Biometrics workshop welcomes submissions that focus on biometrics and promote the development of: a) methods to interpret biometric models in order to validate their decisions, improve the models, and detect possible vulnerabilities; b) quantitative methods to objectively assess and compare different explanations of automatic decisions; c) methods to generate better explanations; and d) more transparent algorithms. xAI4Biometrics is organised by INESC TEC.

Important Info

Where? At ECCV 2024 - Milan, Italy

When? 30th of September, 2024 (Morning)

Full Paper Submission: 19th July 2024 (FIRM DEADLINE)

Author Notification: 12th August 2024

Full Paper Submission (2nd round): 19th August 2024 (FIRM DEADLINE)

Author Notification (2nd round): 26th August 2024

Camera Ready: 29th August 2024 (FIRM DEADLINE)

Registration: 5th September 2024 (FIRM DEADLINE)

Keynote Speakers

Mei Wang, Beijing Normal University, China

Keynote Talk: Towards Fairness in Face Recognition

Abstract: With the emergence of deep convolutional neural networks, the research focus of face recognition has shifted to deep-learning-based approaches, dramatically boosting accuracy to above 99%. However, recognition accuracy is not the only aspect to consider when designing learning algorithms. As a growing number of face recognition applications become integrated into our lives, their potential for unfairness is raising alarms. The varying performance of face recognition models across different demographic groups leads to unequal access to services for some of those groups. This talk will examine racial bias in face recognition from both the data and the algorithmic perspective. I will present the training and testing datasets used in this field and explore the underlying causes of this bias. I will then describe various debiasing methods and share our efforts in developing fair face recognition models using reinforcement learning, meta-learning, and domain adaptation.

About the Speaker: Mei Wang is currently an associate professor at the School of Artificial Intelligence, Beijing Normal University. She received her Ph.D. in Information and Communication Engineering from Beijing University of Posts and Telecommunications. Her research interests include computer vision and deep learning, with a particular focus on face recognition, domain adaptation, and AI fairness. She has served as a reviewer for several international journals and conferences, including IEEE PAMI, IEEE TIP, IJCV, CVPR, ICCV, and ECCV.

Naser Damer, Darmstadt University, Germany

Keynote Talk: Explaining Relations: Biometric Matching Explainability Beyond the Classical Classification

Abstract: Biometric recognition is increasingly used in critical verification processes, making it essential to assess the trustworthiness of such decisions and to better understand their underlying causes. Traditional explainability tools are typically designed to explain decisions (often classification decisions) made by neural networks during inference. However, a biometric decision involves comparing the results of two separate inference operations and deciding based on that comparison, which makes conventional explainability tools less effective. Beyond the technical challenge of explaining biometric decisions, explainability is needed in several forms. Questions such as “How certain am I in a verification decision?”, “How confident am I in a verification score?”, “Which areas of the images contribute the most to a positive or negative match when verifying a pair of biometric images?”, and “Which frequency bands of the images are most influential in these decisions?” are all valid and increasingly necessary to address. This talk will explore these questions and more.

About the Speaker: Dr. Naser Damer is a senior researcher at the competence center Smart Living & Biometric Technologies, Fraunhofer IGD. He received his Master of Science degree in electrical engineering from the Technische Universität Kaiserslautern (2010) and his PhD in computer science from the Technische Universität Darmstadt (2018). He has been a researcher at Fraunhofer IGD since 2011, performing applied research, scientific consulting, and system evaluation. His main research interests lie in the fields of biometrics, machine learning, and information fusion, and he has published more than 50 scientific papers in these fields. Dr. Damer is a Principal Investigator at the National Research Center for Applied Cybersecurity CRISP in Darmstadt, Germany. He serves as a reviewer for a number of journals and conferences and as an associate editor for The Visual Computer journal. He represents the German Institute for Standardization (DIN) in the ISO/IEC SC37 biometrics standardization committee.

Call for Papers

The xAI4Biometrics Workshop welcomes submissions that focus on biometrics and promote the development of: a) methods to interpret biometric models in order to validate their decisions, improve the models, and detect possible vulnerabilities; b) quantitative methods to objectively assess and compare different explanations of automatic decisions; c) methods to generate better explanations; and d) more transparent algorithms.

Topics of Interest

The xAI4Biometrics Workshop welcomes works that focus on biometrics and promote the development of:

- Methods to interpret biometric models in order to validate their decisions, improve the models, and detect possible vulnerabilities;

- Quantitative methods to objectively assess and compare different explanations of automatic decisions;

- Methods and metrics to study/evaluate the quality of explanations obtained by post-model approaches and improve the explanations;

- Methods to generate model-agnostic explanations;

- Transparency and fairness in AI algorithms, including bias avoidance;

- Interpretable methods able to explain decisions of previously built and unconstrained (black-box) models;

- Inherently interpretable (white-box) models;

- Methods that use post-model explanations to improve the models’ training;

- Methods to achieve/design inherently interpretable algorithms (rule-based, case-based reasoning, regularization methods);

- Studies on causal learning, causal discovery, causal reasoning, causal explanations, and causal inference;

- Natural language generation for explanatory models;

- Methods for adversarial attack detection, explanation, and defense (“How can we interpret adversarial examples?”);

- Theoretical approaches to explainability (“What makes a good explanation?”);

- Applications of all the above including proof-of-concepts and demonstrators of how to integrate explainable AI into real-world workflows and industrial processes.

You can download the official call for papers here!

Don't forget to submit your paper by 19th July 2024. All submitted papers must use the official ECCV 2024 template. For further guidelines, please see the ECCV submission policies.

Registration Information

Each paper accepted to the xAI4Biometrics workshop must be covered by a "2-Day Workshop" registration, which is mandatory and cannot be replaced by a "2-Day Workshop student" or any other registration type. A single "2-Day Workshop" registration covers up to two workshop papers. Authors must complete their registration by 5 September 2024 to ensure their paper is included in the workshop. Remote presentations are permitted, but a paper presented remotely must still be covered by a "2-Day Workshop" registration.

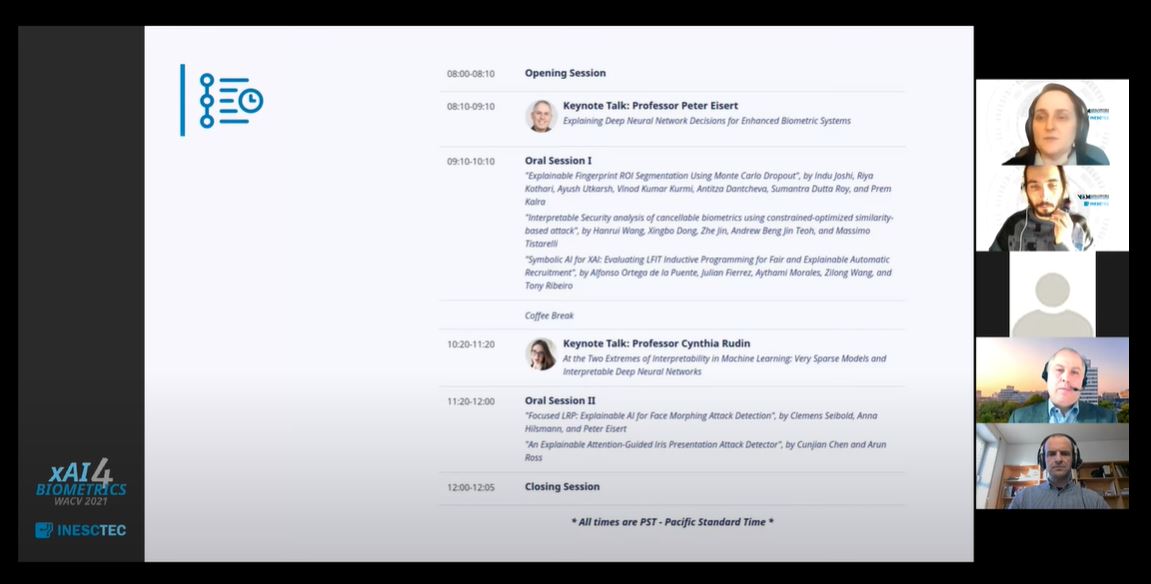

Programme

Opening Session

Naser Damer, Darmstadt University, Germany

Keynote Talk: Explaining Relations: Biometric Matching Explainability Beyond the Classical Classification

Oral Session I

- "How were you created? Explaining synthetic face images generated by diffusion models" - Bhushan S. Atote

- "FaceOracle: Chat with a Face Image Oracle" - Wassim Kabbani

Coffee Break

Mei Wang, Beijing Normal University, China

Keynote Talk: Towards Fairness in Face Recognition

Oral Session II

- "Evaluation Framework for Feedback Generation Methods in Skeletal Movement Assessment" - Tal Hakim

- "How to Squeeze An Explanation Out of Your Model" - Tiago Roxo

- "Frequency Matters: Explaining Biases of Face Recognition in the Frequency Domain" - Marco Huber

- "Makeup-Guided Facial Privacy Protection via Untrained Neural Network Priors" - Fahad Shamshad

Closing Session

All times are CEST (Central European Summer Time).

Organisers

Support

Thanks to those helping us make xAI4Biometrics an awesome workshop!

Want to join this list? Check our sponsorship opportunities (to be added shortly)!

Advancing Transparency and Privacy: Explainable AI and Synthetic Data in Biometrics and Computer Vision

The 4th Workshop on Explainable & Interpretable Artificial Intelligence for Biometrics has partnered with the Image and Vision Computing journal to create a Special Issue that promotes research on the use of Explainable AI and Synthetic Data to enhance transparency and privacy in Biometrics and Computer Vision. Find more details on this Special Issue here.