About xAI4Biometrics

The xAI4Biometrics Workshop at WACV 2023 aims to promote research on explainable and interpretable AI to facilitate the implementation of AI/ML in the biometrics domain, and specifically to foster transparency and trust. The xAI4Biometrics workshop welcomes submissions that focus on biometrics and promote the development of: a) methods to interpret the biometric models to validate their decisions as well as to improve the models and to detect possible vulnerabilities; b) quantitative methods to objectively assess and compare different explanations of the automatic decisions; c) methods to generate better explanations; and d) more transparent algorithms. xAI4Biometrics is organised by INESC TEC.

The xAI4Biometrics Workshop will be run remotely. We will do our best to guarantee that the workshop runs smoothly. We expect to repeat the good experience we had at WACV 2021 and 2022, when everything was remote and the feedback we received from participants was very positive. Each workshop will have its own Zoom channel, and all talks (keynotes and paper presentations) will be given through that platform. If you have any questions, please do not hesitate to contact us.

Important Info

Where? At WACV 2023, Online

When? January 7, 2023

Full Paper Submission: 25 October 2022 (extended from 11 October 2022)

Author Notification: 11 November 2022

Camera Ready & Registration: 19 November 2022

Keynote Speakers

Abstract: Biometrics refers to the use of physical and behavioral traits such as fingerprints, face, iris, voice and gait to recognize an individual. The biometric data (e.g., a face image) acquired from an individual may be modified for several reasons. While some modifications are intended to improve the performance of a biometric system (e.g., image enhancement), others may be intentionally adversarial (e.g., spoofing or obfuscating an identity). Furthermore, the data may be subjected to a sequence of alterations resulting in a set of near-duplicate data (e.g., applying a sequence of image filters to an input face image). In this talk, we will discuss methods for (a) detecting altered biometric data; (b) determining the relationship between near-duplicate biometric data and constructing a phylogeny tree denoting the sequence in which they were transformed; and (c) using altered biometric data to enhance privacy.

About the Speaker: Arun Ross is the Martin J. Vanderploeg Endowed Professor in the College of Engineering and a Professor in the Department of Computer Science and Engineering. He also serves as the Site Director of the NSF Center for Identification Technology Research (CITeR). He received the B.E. (Hons.) degree in Computer Science from BITS Pilani, India, and the M.S. and PhD degrees in Computer Science and Engineering from Michigan State University. He was on the faculty of West Virginia University between 2003 and 2012, where he received the Benedum Distinguished Scholar Award for excellence in creative research and the WVU Foundation Outstanding Teaching Award. His expertise is in the areas of biometrics, computer vision and machine learning. He has advocated for the responsible use of biometrics in multiple forums, including the NATO Advanced Research Workshop on Identity and Security in Switzerland in 2018. He testified as an expert panelist in an event organized by the United Nations Counter-Terrorism Committee at the UN Headquarters in 2013. Ross is a recipient of the NSF CAREER Award. He was designated a Kavli Fellow by the US National Academy of Sciences in 2006. In recognition of his contributions to the field of pattern recognition and biometrics, the International Association for Pattern Recognition (IAPR) honored him with the prestigious J.K. Aggarwal Prize in 2014 and the Young Biometrics Investigator Award in 2013.

Abstract: Face recognition systems are spreading worldwide and have a growing effect on our daily life. Since these systems are increasingly involved in critical decision-making processes, such as in forensics and law enforcement, there is a growing need to make the face recognition process explainable to humans. Previous works on explainable artificial intelligence for biometrics focused on making either the matching decisions or the decisions from presentation attack detection understandable. In contrast, this work aims to make the utility of face images for recognition explainable to humans before the images are used for matching. More precisely, a model-agnostic and training-free approach is introduced to determine the utility of single pixels in an image for recognition. The utility of single pixels can help to (a) explain why an image cannot be used as a reference image during the enrolment process, (b) enhance recognition performance by inpainting or merging low-quality areas of the face to create images of higher utility, and (c) better understand face image quality assessment and the behavior of face recognition models.

About the Speaker: Philipp Terhörst is a research group leader at Paderborn University working on “Responsible AI for Biometrics”. He received his Ph.D. in computer science in 2021 from the Technical University of Darmstadt for his work on “Mitigating Soft-Biometric Driven Bias and Privacy Concerns in Face Recognition Systems” and worked at the Fraunhofer IGD from 2017 to 2022. He was also an ERCIM fellow at the Norwegian University of Science and Technology, funded by the European Research Consortium for Informatics and Mathematics. His interest lies in responsible machine learning algorithms in the context of biometrics. This includes the topics of fairness, privacy, explainability, uncertainty, and confidence. Dr. Terhörst is the author of several publications in conferences and journals such as CVPR and IEEE TIFS and regularly serves as a reviewer for venues such as TPAMI, TIP, PR, BTAS, and ICB. For his scientific work, he has received several awards, including from the European Association for Biometrics and the International Joint Conference on Biometrics. He furthermore participated in the ’Software Campus’ Program, a management program of the German Federal Ministry of Education and Research (BMBF).

Call for Papers

The xAI4Biometrics Workshop welcomes submissions that focus on biometrics and promote the development of: a) methods to interpret the biometric models to validate their decisions as well as to improve the models and to detect possible vulnerabilities; b) quantitative methods to objectively assess and compare different explanations of the automatic decisions; c) methods to generate better explanations; and d) more transparent algorithms.

Topics of Interest

The xAI4Biometrics Workshop welcomes works that focus on biometrics and promote the development of:

- Methods to interpret the biometric models to validate their decisions as well as to improve the models and to detect possible vulnerabilities;

- Quantitative methods to objectively assess and compare different explanations of the automatic decisions;

- Methods and metrics to study/evaluate the quality of explanations obtained by post-model approaches and improve the explanations;

- Methods to generate model-agnostic explanations;

- Transparency and fairness in AI algorithms avoiding bias;

- Interpretable methods able to explain decisions of previously built and unconstrained (black-box) models;

- Inherently interpretable (white-box) models;

- Methods that use post-model explanations to improve the models’ training;

- Methods to achieve/design inherently interpretable algorithms (rule-based, case-based reasoning, regularization methods);

- Studies on causal learning, causal discovery, causal reasoning, causal explanations, and causal inference;

- Natural language generation for explanatory models;

- Methods for adversarial attack detection, explanation and defense ("How can we interpret adversarial examples?");

- Theoretical approaches to explainability (“What makes a good explanation?”);

- Applications of all the above including proof-of-concepts and demonstrators of how to integrate explainable AI into real-world workflows and industrial processes.

The workshop papers will be published in IEEE Xplore as part of the WACV 2023 Workshops Proceedings and will be indexed separately from the main conference proceedings. The papers submitted to the workshop should follow the same formatting requirements as the main conference. For more details and templates, click here.

Don't forget to submit your paper by 25 October 2022 (extended from 11 October 2022).

Programme

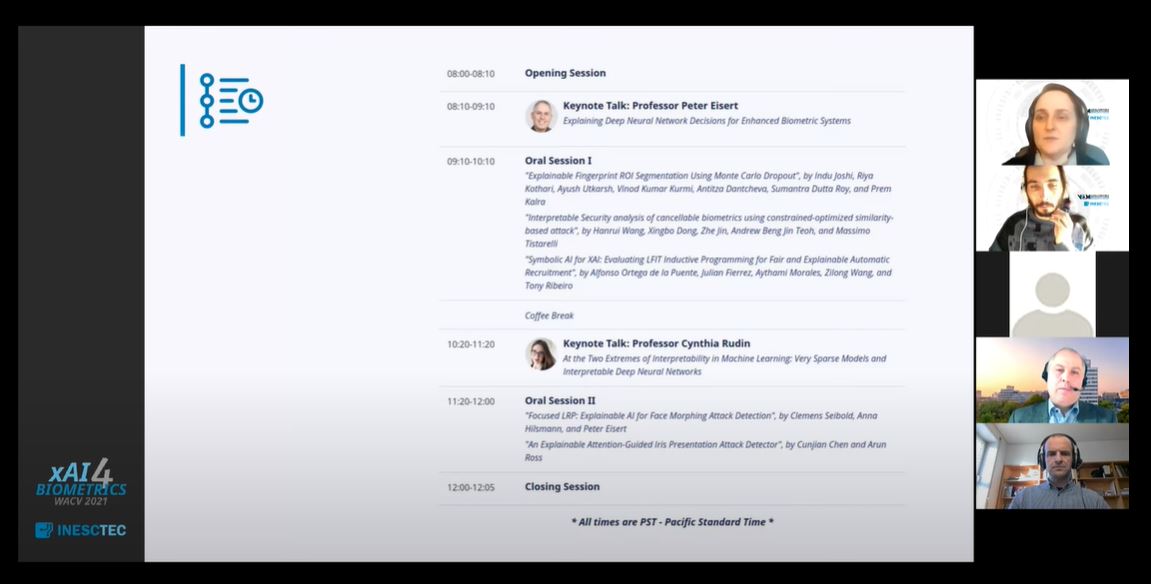

Opening Session

Arun A. Ross, Michigan State University, USA

Keynote Talk: Fairness and Integrity: Detecting Altered Biometric Data

Oral Session I

- "Learning Pairwise Interaction for Generalizable DeepFake Detection" - Ying Xu, Kiran Raja, Luisa Verdoliva, Marius Pedersen

- "A Principal Component Analysis-Based Approach for Single Morphing Attack Detection" - Laurine Dargaud, Mathias Ibsen, Juan Tapia, Christoph Busch

- "Explainable Model-Agnostic Similarity and Confidence in Face Verification" - Martin Knoche, Gerhard Rigoll, Stefan Hörmann, Torben Teepe

Coffee Break

Philipp Terhörst, Paderborn University, Germany

Keynote Talk: On the Utility of Single Pixels for Explainable Face Recognition

Oral Session II

- "Finger-NestNet: Interpretable Fingerphoto Verification on Smartphone using Deep Nested Residual Network" - Raghavendra Ramachandra, Hailin Li

- "Human Saliency-Driven Patch-based Matching for Interpretable Post-mortem Iris Recognition" - Aidan Boyd, Daniel Moreira, Andrey Kuehlkamp, Kevin Bowyer, Adam Czajka

Closing Session

* All times are HST - Hawaii Standard Time *

Organisers

Support

Thanks to those helping us make xAI4Biometrics an awesome workshop!

Want to join this list? Check our sponsorship opportunities here!

The project TAMI - Transparent Artificial Medical Intelligence (NORTE-01-0247-FEDER-045905), which partially funds this event, is co-financed by ERDF - European Regional Development Fund through the North Portugal Regional Operational Program - NORTE 2020 and by the Portuguese Foundation for Science and Technology - FCT under the CMU - Portugal International Partnership.